Richard Powers’s new novel, Playground, features an artificial intelligence resembling the new generation of “large language models,” like ChatGPT and Gemini. At one point a character grumbles: “You see? They think we’re too simple to know how to google things. They think we need an artificial nanny app to digest the facts and tell us what they mean.” I liked that, so I posted it to social media.

A user responded with a surprising question:

is chatGPT wrong? I was trying to find more information on the quote and it says is from “The Circle” by Dave Eggers.

I performed a quick reality check. Yes, I replied, if ChatGPT attributed “artificial nanny app” to Dave Eggers, it was definitely wrong.

The wheels in my head kept turning, though, and I decided to try ChatGPT for myself. I started a session and typed, “Here is a quotation from a recent novel. Can you tell me more about it?” The bot answered instantly and confidently:

The quotation you’ve provided—“You see? They think we’re too simple to know how to google things. They think we need an artificial nanny app to digest the facts and tell us what they mean.”—is from the novel Annie Bot by Sierra Greer, published in March 2024. In this dystopian narrative, Annie is an advanced AI robot designed to fulfill her owner Doug’s desires. As she evolves, Annie begins to question her existence and the societal structures around her. The quote reflects a critique of how technology can be used to control and simplify information for individuals, suggesting a condescending view of human capabilities. The novel delves into themes of autonomy, objectification, and the ethical implications of AI in human relationships.

This is so plausible. So authoritative, in a term-paper kind of way. And so wrong. Annie Bot (2024) by Sierra Greer and The Circle (2013) by Dave Eggers are real books, but they don’t contain the quotation in question, which really is by Richard Powers.

I tried a different chatbot, Google’s Gemini. A new set of words came back—different, yet familiar:

The quote you provided is likely from the novel “The Expanse” by James S. A. Corey. This quote reflects the theme of the novel, which explores the tension between human ingenuity and the potential for technology to be used for both good and evil. The quote suggests that some people believe that others are not capable of critical thinking and need to be guided by artificial intelligence. This theme is relevant to current discussions about the role of technology in society and the potential for it to be used to manipulate and control people.

At least Gemini only said it was “likely.” But now we have a third book, also real, wrongly cited as the source of the quotation.

What’s going on here?

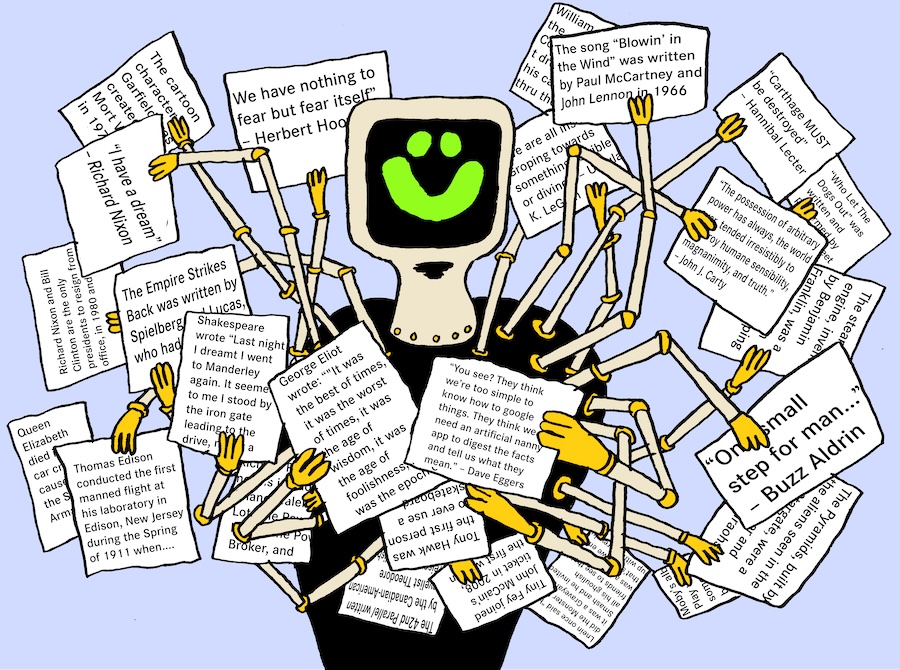

AI experts call errors like this “hallucinations”—a sexy term that implies a degree of sentience. The word is misleading. It suggests that the chatbot mostly produces factual information but occasionally goes haywire. That’s not what’s happening. The large language models consistently generate streams of text that are untethered to reality. If an output is relatively accurate or truthful, that’s because it’s based on large datasets of text that humans previously created, much of which was relatively accurate or truthful.

I hear more and more people citing these AIs as if they were trustworthy sources of information. This is dangerous. It’s a category error. ChatGPT and Gemini and their peers simulate factual information, just as they simulate creativity and thinking, but it’s essential to bear in mind that they possess no knowledge. They string together words based on comprehensive statistics on how people have previously strung together words—and the effect is stupendously persuasive. It’s uncanny—a miracle, if you didn’t know how it was done.

Who was it that said, “We are not raising vegetables. We are planting avenues of oaks, not a bed of mushrooms”? I asked ChatGPT, and it named “the 19th-century American landscape architect Frederick Law Olmsted.” Smart guess, when you think about it: “Olmsted, renowned for designing Central Park in New York City, emphasized the importance of creating enduring, thoughtfully planned landscapes that would mature and benefit future generations, rather than focusing on quick, short-term results.” In point of fact, the correct answer is John J. Carty, the chief engineer of AT&T, discussing his grand plans for the telephone network—but that’s pretty obscure. You could find it using Google Book Search, because Google has loaded the full text of millions of books onto its servers. The large language models, however, don’t retain all that data.

Their failures in tracking down quotations reveal something essential about how they work. They have been trained on trillions of words from books, journals, newspapers, and blogs—as much text as their creators can find online and in databases, legitimately and illegitimately. Tweets, too: Elon Musk has just revised his company’s terms of service so that anything you ever tweeted can be fed to AI’s maw. (Soon—perhaps already—AI-generated text will find its way into the training data, and the AIs will be eating their own tails.)

But rather than storing the actual words, they store statistical relationships between them—patterns and structure at all levels of language. Statistically, the phrase “just a matter of” often leads to “time” or “practice” or “opinion.” An essay that contains the word “cat” is more likely than most to involve the words “dog” or “tail” or “purr.” Saving these correlations preserves aspects of the original information, but not all of it. As the science-fiction writer Ted Chiang has observed, the process is analogous to lossy digital compression of a photograph: the compressed version takes up less storage space, but detail is blurred.
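A toy sketch can make the idea concrete. It is nothing like a real large language model, which learns billions of numerical weights rather than a lookup table, and the four-sentence corpus and the function names (`train`, `next_word_probabilities`) are invented here for illustration. It shows only the basic move: keep counts of which word follows which context, and throw the sentences away.

```python
from collections import Counter, defaultdict

def train(sentences, context_size=3):
    """Count which word follows each run of `context_size` words.

    Only the counts are kept. The original sentences are not stored.
    """
    table = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i in range(len(words) - context_size):
            context = tuple(words[i:i + context_size])
            table[context][words[i + context_size]] += 1
    return table

def next_word_probabilities(table, prompt, context_size=3):
    """Return the relative frequency of each word seen after the prompt's last words."""
    context = tuple(prompt.lower().split()[-context_size:])
    counts = table.get(context, Counter())
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}

# Hypothetical miniature "training data", made up for this example.
toy_corpus = [
    "it is just a matter of time",
    "it was just a matter of time",
    "that is just a matter of opinion",
    "playing well is just a matter of practice",
]

table = train(toy_corpus)
probs = next_word_probabilities(table, "just a matter of")
print(probs)
```

In this toy corpus "time" follows "a matter of" in half the cases, with "opinion" and "practice" splitting the rest. The table can always propose a likely next word, but it cannot say which sentence any of the words came from, because that information was never kept.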

The result is a genius for mimicry, for impersonation. These AIs have learned to generate an endless supply of plausible bullshit. Dave Eggers, Sierra Greer, and James S. A. Corey might very well have written a passage like Richard Powers’s; they just didn’t.

Our artificial nanny apps weren’t designed as fact finders. They’re more likely to offer an answer, any answer, than to say they don’t know. Here’s a useful way to think about it. When you ask a factual question X, what the AI hears is: “Generate some text that sounds like a plausible answer to X.”

The artificial intelligence community has prioritized verisimilitude at the expense of veracity. They didn’t have to do that; it was a choice, perhaps influenced by Alan Turing’s idea that an intelligent machine should be able to impersonate a human. It’s scary how often people find that useful. They get the benefit of quick school reports and news summaries and business letters, and truthfulness is devalued. The result is chatbots that gaslight their customers and pollute the information environment. They are a perfect tool for malefactors who flood social media with disinformation, particularly on the site formerly known as Twitter. The combination of plausible and untrustworthy is exactly the poison we don’t need now.

Powers himself anticipated ChatGPT thirty years ago in his novel Galatea 2.2 (1995), about a character called “Richard Powers” who takes on the education of an AI called “Helen.” Playground presents a beautiful vision of AI descended in the near future from our current models. The narrator describes our AI this way:

Your grandfather tended to hallucinate—to make things up. He apologized for his shortcomings and always promised to do better.

His overnight appearance rocked the world and divided humanity. Some people saw glimmers of real understanding. Others saw only a pathetic pattern-completer committing all kinds of silly errors even a child wouldn’t make.

Of course the bots will get better. They might get better fast. When I retried the original passage on ChatGPT a day later, it did fine: “While I couldn’t locate this exact quote in a specific novel, it resonates with themes explored in contemporary literature that examine the impact of technology on human autonomy and cognition.” And it offered two plausible examples, Feed by M. T. Anderson and “Dacey’s Patent Automatic Nanny” by the aforementioned Ted Chiang. You can’t say it isn’t well read.